Tiny random manufacturing defects now costing chipmakers billions

This publication is a summary or evaluation of another publication. This publication contains editorial commentary or bias from the source.

Randomness at the nanoscale is limiting semiconductor yields

The Hidden Quirk Costing the Semiconductor Industry Billions: Unpacking the Overlooked Inefficiencies in Chip Manufacturing

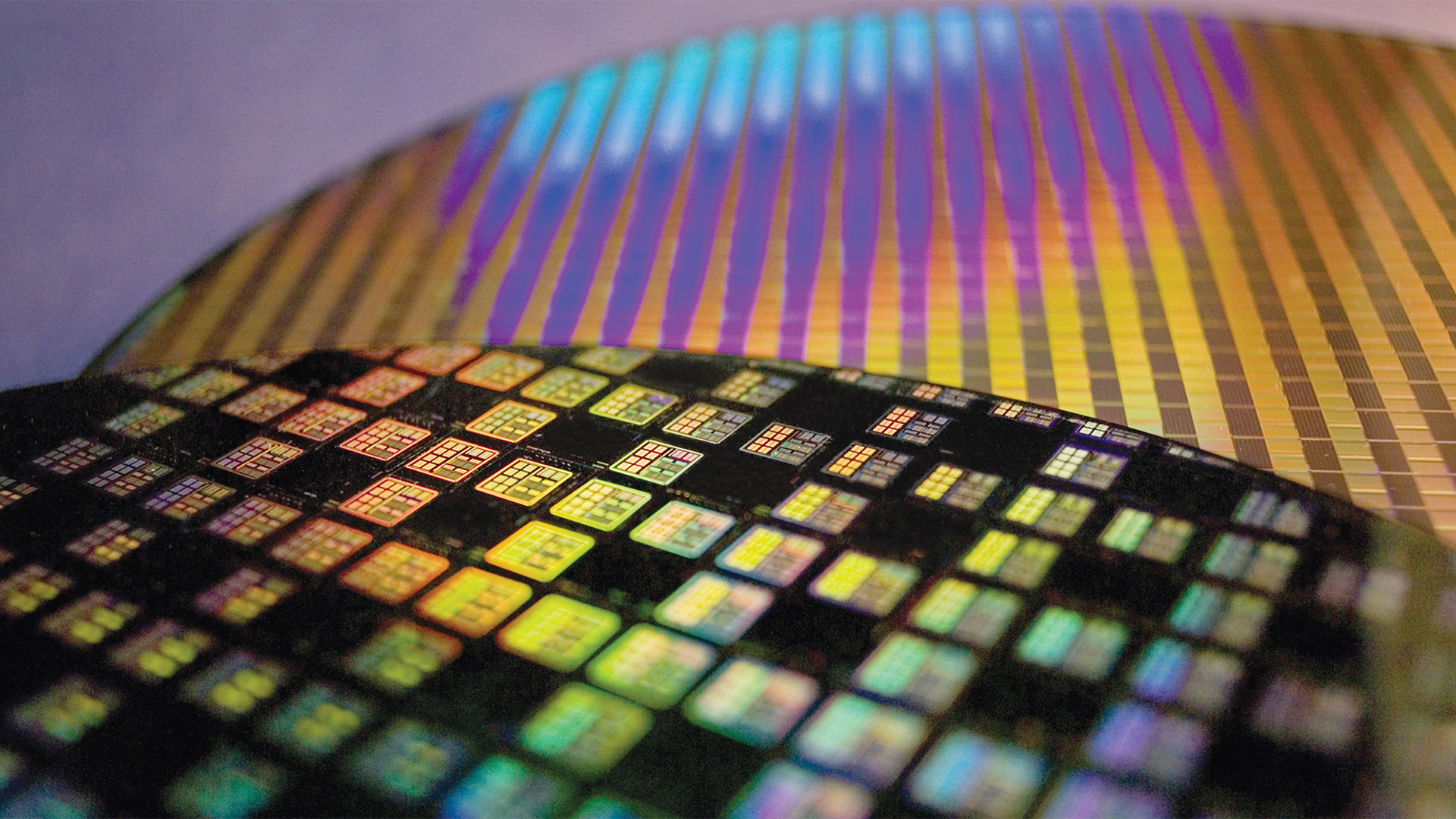

In the high-stakes world of technology, where semiconductors power everything from smartphones to supercomputers, the industry is grappling with a surprisingly obscure yet profoundly costly problem. According to insights from industry experts and recent analyses, the global semiconductor sector is hemorrhaging billions of dollars each year due to a subtle quirk in the way chips are designed, manufactured, and optimized. This isn't about supply chain disruptions, geopolitical tensions, or raw material shortages—issues that often dominate headlines. Instead, it's a fundamental inefficiency baked into the very process of creating these microscopic marvels, one that has flown under the radar for years but is now coming into sharp focus as demands for more efficient computing skyrocket.

At the heart of this issue is something called "guardbanding," a precautionary measure that chipmakers employ to ensure reliability and performance under varying conditions. To understand guardbanding, we need to delve into the intricacies of semiconductor production. Chips are not uniform; they're produced in vast quantities on silicon wafers, and each one can vary slightly due to manufacturing imperfections, temperature fluctuations, voltage inconsistencies, and even the aging of materials over time. To account for these variables, engineers design chips with extra margins—essentially, buffers that guarantee the chip will perform as expected even in less-than-ideal scenarios. This might mean running a processor at a slightly lower clock speed or higher voltage than theoretically necessary to prevent failures.
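The margin logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Corner` type, the operating conditions, the clock figures, and the 10% margin are all hypothetical values chosen for the example, not data from any chipmaker.

```python
from dataclasses import dataclass


@dataclass
class Corner:
    """One hypothetical operating condition and the highest clock that stays stable there."""
    name: str
    max_stable_mhz: float


def static_guardbanded_clock(corners, margin_fraction):
    """Rate the chip at the slowest corner's stable clock, reduced by a fixed margin."""
    worst_case = min(c.max_stable_mhz for c in corners)
    return worst_case * (1.0 - margin_fraction)


# Illustrative numbers only (assumptions, not measurements).
corners = [
    Corner("typical, 25 C", 4000.0),
    Corner("hot, 100 C", 3700.0),
    Corner("aged, low voltage", 3500.0),
]

rated_mhz = static_guardbanded_clock(corners, margin_fraction=0.10)
unused_at_typical_mhz = corners[0].max_stable_mhz - rated_mhz

print(f"rated clock: {rated_mhz:.0f} MHz")
print(f"headroom left unused under typical conditions: {unused_at_typical_mhz:.0f} MHz")
```

The point of the sketch is that a single rated clock is set by the worst condition plus a buffer, so the chip gives up performance whenever it runs under better conditions.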

While this sounds like prudent engineering, the quirk arises because these guardbands are often overly conservative. In an effort to minimize returns, recalls, or system crashes, manufacturers err on the side of caution, leading to chips that are over-provisioned. This over-provisioning translates to wasted power, reduced efficiency, and, ultimately, significant financial losses. Industry estimates suggest that this practice alone could be costing the sector anywhere from $10 billion to $20 billion annually, though exact figures are hard to pin down due to the proprietary nature of chip design data. The losses manifest in multiple ways: higher energy consumption in data centers, shorter battery life in consumer devices, and the need for more raw materials and manufacturing capacity to achieve the same level of performance.
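To see why a voltage margin turns into wasted power, a rough worked example helps. It relies on the standard approximation that dynamic (switching) power scales with the square of supply voltage at a fixed clock frequency; the margin values below are hypothetical, and leakage power is ignored for simplicity.

```python
def dynamic_power_overhead(voltage_margin_fraction):
    """Fractional rise in dynamic power caused by a voltage margin at fixed frequency.

    Uses the approximation P_dynamic ~ C * V^2 * f, so raising V by a
    fraction m multiplies power by (1 + m)^2. Leakage is not modeled.
    """
    return (1.0 + voltage_margin_fraction) ** 2 - 1.0


# Hypothetical voltage margins, chosen only for illustration.
for margin in (0.02, 0.05, 0.10):
    overhead = dynamic_power_overhead(margin)
    print(f"{margin:.0%} extra voltage -> {overhead:.2%} extra dynamic power")
```

Because the relationship is quadratic, even a modest voltage buffer costs proportionally more in power than it adds in margin.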

To illustrate, consider the modern CPU or GPU. These components are engineered to handle peak loads, but in reality, they rarely operate at full capacity. Guardbanding ensures they don't falter when pushed, but it also means they're not optimized for average use cases. This inefficiency is exacerbated by the relentless march toward smaller process nodes—think 5nm, 3nm, and beyond—where variations become more pronounced, necessitating even larger guardbands. As transistors shrink, quantum effects and thermal issues amplify, making precise control harder. The result? Chips that could theoretically be 20-30% more efficient are held back, forcing companies like Intel, TSMC, and Samsung to pour resources into compensating for these quirks rather than innovating further.

Experts from organizations like Arm and various research institutions have highlighted how this problem is particularly acute in the era of AI and machine learning. With the explosion of data centers supporting generative AI models, energy efficiency is paramount. A single AI training session can consume as much power as hundreds of households, and guardbanding contributes to unnecessary waste. For instance, if a server farm's chips are guardbanded to handle extreme heat, they might draw more power than needed during cooler operations, inflating electricity bills and carbon footprints. This not only hits the bottom line for tech giants like Google and Amazon but also raises environmental concerns, as the semiconductor industry's carbon emissions are projected to rival those of the aviation sector by decade's end.

But why hasn't this quirk been addressed sooner? Part of the reason lies in the conservative culture of the industry. Chip failures can be catastrophic: recall the infamous Pentium FDIV bug of 1994, which led Intel to take a charge of roughly $475 million for replacements. To avoid such PR disasters, companies prioritize reliability over optimization. Additionally, the tools and methodologies for precise guardbanding are still evolving. Traditional static guardbands apply a one-size-fits-all approach, but emerging dynamic techniques, which adjust margins in real time based on operating conditions, promise relief. Startups and research labs are experimenting with adaptive voltage scaling and machine learning algorithms that monitor chip health and tweak performance on the fly, potentially reclaiming much of the lost efficiency.
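A dynamic approach of the kind mentioned here might look roughly like the following control loop. It is a minimal sketch, not any vendor's implementation: the slack model in `simulated_slack_ps`, the thresholds, the step size, and the voltage limits are all invented for illustration, standing in for an on-chip timing monitor.

```python
def simulated_slack_ps(voltage_v, temperature_c):
    """Hypothetical timing-slack model: slack grows with voltage and shrinks with heat."""
    return 400.0 * (voltage_v - 0.70) - 0.5 * (temperature_c - 25.0)


def adapt_voltage(voltage_v, temperature_c, target_slack_ps=20.0,
                  step_v=0.005, v_min=0.60, v_max=1.10):
    """One control step: raise voltage if slack is too thin, lower it if slack is excessive."""
    slack = simulated_slack_ps(voltage_v, temperature_c)
    if slack < target_slack_ps:
        return min(v_max, voltage_v + step_v)
    if slack > 2.0 * target_slack_ps:
        return max(v_min, voltage_v - step_v)
    return voltage_v  # slack is inside the acceptable band


def settle(voltage_v, temperature_c, max_steps=1000):
    """Repeat control steps until the voltage stops changing."""
    for _ in range(max_steps):
        next_v = adapt_voltage(voltage_v, temperature_c)
        if next_v == voltage_v:
            break
        voltage_v = next_v
    return voltage_v


# Start from a conservative, static-style setting of 1.0 V (an assumed value).
cool_v = settle(1.0, temperature_c=25.0)
hot_v = settle(1.0, temperature_c=85.0)
print(f"settled voltage when cool: {cool_v:.3f} V, when hot: {hot_v:.3f} V")
```

In this toy model the loop settles at a lower voltage when the chip is cool than when it is hot, whereas a static guardband would hold the worst-case voltage in both cases.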

Take, for example, the work being done at companies like Cerebras or Graphcore, which are designing specialized AI chips with minimal guardbanding to maximize throughput. These innovators argue that by leveraging better simulation models and post-manufacturing testing, the industry can reduce waste significantly. Broader adoption could come from standards bodies like JEDEC, which set guidelines for memory and processor interfaces, incorporating more flexible guardband protocols. However, transitioning isn't straightforward; it requires rethinking supply chains, investing in new fabrication equipment, and retraining engineers accustomed to the old ways.

The economic implications extend beyond the chipmakers themselves. Downstream industries, from automotive (where chips power electric vehicles) to telecommunications (enabling 5G networks), feel the ripple effects. In electric cars, for instance, inefficient semiconductors mean reduced range per charge, which could slow EV adoption. In smartphones, it translates to devices that heat up faster or drain batteries quicker, frustrating consumers and prompting earlier upgrades—ironically boosting sales but at the cost of sustainability.

Geopolitically, this quirk underscores vulnerabilities in the global semiconductor supply. With much of advanced manufacturing concentrated in Taiwan and South Korea, any inefficiency amplifies risks from natural disasters or trade wars. The U.S. CHIPS Act, which allocates billions to bolster domestic production, could address this by funding research into efficiency-enhancing technologies. Similarly, Europe's push for semiconductor sovereignty includes grants for R&D aimed at reducing guardband-related losses.

Looking ahead, the industry is at a crossroads. As Moore's Law slows and quantum computing looms on the horizon, tackling this obscure quirk could unlock the next wave of innovation. Analysts predict that by optimizing guardbands, chip efficiency could improve by 15-25% in the coming years, translating to savings in the tens of billions. This would not only alleviate financial pressures but also align with global sustainability goals, such as those outlined in the Paris Agreement.

In conversations with industry insiders, there's a growing consensus that awareness is the first step. "We've been living with this inefficiency for so long that it's become invisible," one Silicon Valley veteran told me. "But with AI demanding more from less, we can't afford to ignore it anymore." Indeed, as the world becomes increasingly digital, exposing and fixing such quirks isn't just about saving money; it's about ensuring the technological foundation of our future is as robust and efficient as possible.

This hidden cost in semiconductor production serves as a reminder that even in the most advanced fields, small oversights can lead to massive consequences. By addressing guardbanding head-on, the industry could pave the way for greener, more powerful computing, benefiting everyone from everyday users to the planet at large. As we continue to push the boundaries of what's possible with silicon, overcoming this little-known quirk might just be the key to unlocking the next era of technological progress.

Read the Full TechRadar Article at:

[ https://www.techradar.com/pro/the-semiconductor-industry-is-losing-billions-of-dollars-annually-because-of-this-little-obscure-quirk ]